How Serverless Cloud Computing Is Propelling Data Center Growth

“Is traditional data center infrastructure equipped for the demands of tomorrow?” This question echoes through the corridors of technological evolution, urging us to reevaluate conventional approaches. With the advent of serverless cloud computing in data centers, the answer increasingly points toward change.

Serverless cloud computing is emerging as a transformative force in the data center landscape. Did you know that, according to Research Nester, the global serverless architecture market is anticipated to exceed USD 193.42 billion by the end of 2035? This projection underlines the bright outlook for serverless computing. Let’s explore how serverless architecture works and how it helps overcome limitations that have long held back data center evolution.

A Quick Overview of Serverless Cloud Computing

Before we walk through how serverless cloud computing propels data center growth, let’s take a quick look at the concept itself.

Serverless cloud computing is a model that lets developers focus on writing code without the burden of managing servers. In this architecture, the cloud provider dynamically manages resource allocation and automatically scales up or down to match the application’s requirements. This marks a significant departure from traditional Infrastructure as a Service (IaaS) and Platform as a Service (PaaS) models. With IaaS, teams provision, maintain, and scale their own virtual servers. With PaaS, the provider manages the operating system and runtime, but teams typically still decide how much capacity to run.
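To make the model concrete, here is a minimal sketch of a serverless function in Python. It assumes the `handler(event, context)` convention used by AWS Lambda's Python runtime. The `name` field in the event and the local call at the bottom are hypothetical stand-ins for a real trigger and for the platform's own invocation. The developer writes only the function. Provisioning, scaling, and routing events to it are the provider's job.

```python
import json


def handler(event, context):
    """Entry point the platform invokes once per event.

    `event` is a dict describing the trigger (here a hypothetical
    payload with an optional "name" field); `context` carries runtime
    metadata and is unused in this sketch.
    """
    name = event.get("name", "world")
    # Return an HTTP-style response; delivering it is the platform's job.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local stand-in for a platform invocation; no server setup involved.
    print(handler({"name": "data center"}, None))
```

Note what is absent: no web server, no port binding, no instance count. That absence is the "focus solely on code" point in practice.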

Let’s look at the key benefits this approach provides.

- Cost Efficiency

The pay-as-you-go model is cost-efficient because it removes the need to pre-allocate resources. Organizations are charged only for the compute actually consumed while functions run. Major platforms typically meter this by the number of requests and by execution time multiplied by allocated memory, so idle capacity costs nothing. This makes serverless an appealing choice for developers who prioritize cost-effectiveness.

- Scalability

Serverless architectures provide automatic scaling, ensuring applications can handle varying workloads seamlessly. This dynamic scalability is particularly beneficial for applications with unpredictable usage patterns.

- Focus on Innovation

By abstracting server management, serverless computing lets developers concentrate on writing code and innovating rather than on infrastructure concerns. This can shorten the development lifecycle.
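The pay-as-you-go billing described under Cost Efficiency can be made concrete with a little arithmetic. The sketch below compares a per-request, per-GB-second bill with an always-on server billed by the hour. All prices and the workload figures are hypothetical placeholders, not any provider's actual rates.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    requests_per_month: int
    avg_duration_s: float  # average execution time per request
    memory_gb: float       # memory allocated to the function


# Hypothetical prices for illustration only; real rates vary by
# provider, region, and pricing tier.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667
SERVER_PRICE_PER_HOUR = 0.10
HOURS_PER_MONTH = 730


def serverless_monthly_cost(w: Workload) -> float:
    """Pay-as-you-go: bill only the requests and compute actually used."""
    request_cost = w.requests_per_month / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    gb_seconds = w.requests_per_month * w.avg_duration_s * w.memory_gb
    return request_cost + gb_seconds * PRICE_PER_GB_SECOND


def provisioned_monthly_cost(servers: int) -> float:
    """Pre-allocated capacity: billed every hour, busy or idle."""
    return servers * SERVER_PRICE_PER_HOUR * HOURS_PER_MONTH


if __name__ == "__main__":
    w = Workload(requests_per_month=2_000_000, avg_duration_s=0.2, memory_gb=0.5)
    print(f"serverless:  ${serverless_monthly_cost(w):.2f}")
    print(f"provisioned: ${provisioned_monthly_cost(servers=2):.2f}")
```

With these made-up numbers, the serverless bill is a few dollars, while two always-on servers cost $146 a month. A workload with zero requests costs nothing at all. For steady, high-utilization traffic the comparison can narrow or flip, so the numbers are worth running for a real workload.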

Challenges Faced by Traditional Data Centers

Today, traditional data centers find themselves at a crossroads. Once the stalwarts of information management, these centers face a myriad of challenges in adapting to the rapidly changing technological landscape.

- Inflexibility in Scaling

Traditional data centers often grapple with the challenge of scaling. The process of accommodating growing workloads or downsizing in response to reduced demand can be time-consuming and resource-intensive. This lack of agility hampers the ability to respond promptly to fluctuating business needs.

- High Capital Expenditure

Building and maintaining traditional data centers entail significant capital expenditure. The need for physical infrastructure, including servers, networking equipment, and cooling systems, demands substantial upfront investments. This financial burden can hinder organizations, particularly smaller enterprises, from quickly adapting to evolving technological requirements.

- Resource Underutilization

Traditional data centers often suffer from resource underutilization because they are designed to handle peak workloads. During periods of lower demand, a substantial portion of computing power sits idle, which leads to inefficient use of resources and higher operational costs.

- Energy Consumption and Environmental Impact

The energy demands of traditional data centers are substantial, contributing to a significant environmental footprint. Cooling systems, in particular, consume vast amounts of energy to maintain optimal operating temperatures. As sustainability becomes a global imperative, the environmental impact of these centers poses a considerable challenge.

- Complexity in Management

Managing traditional data centers involves complex tasks, from provisioning and maintenance to addressing security concerns. This complexity requires specialized skills and increases the likelihood of human errors. As a result, it potentially compromises the overall efficiency and reliability of the infrastructure.

- Security Vulnerabilities

Traditional data centers face inherent security vulnerabilities, from physical breaches to cyber threats. The centralized nature of these centers makes them susceptible to targeted attacks, necessitating robust security measures that can be challenging to implement and maintain effectively.
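The underutilization problem described above is easy to quantify. The following sketch assumes a hypothetical 24-hour demand profile, measured in server-equivalents, and a fleet sized for the peak hour. It then computes what fraction of that fleet does useful work on average.

```python
def average_utilization(hourly_demand: list[float], capacity: float) -> float:
    """Fraction of provisioned capacity actually used over the period.

    Demand above capacity is clipped, since a fixed fleet cannot
    serve more than it has.
    """
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    used = sum(min(d, capacity) for d in hourly_demand)
    return used / (capacity * len(hourly_demand))


if __name__ == "__main__":
    # Hypothetical profile: 12 quiet hours, 10 busy hours, a 2-hour peak.
    demand = [10] * 12 + [40] * 10 + [100] * 2
    capacity = max(demand)  # sized for the peak, as described above
    util = average_utilization(demand, capacity)
    print(f"average utilization: {util:.1%}")
```

For this profile, 720 server-hours of work are spread over 2,400 provisioned server-hours. That is 30% average utilization, so roughly 70% of the paid-for capacity sits idle. Real profiles differ, but the peak-sizing effect is the same.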

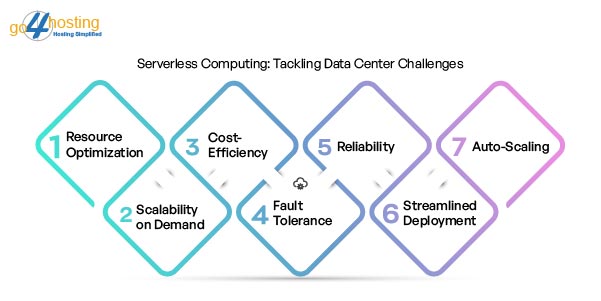

How Serverless Computing Addresses Data Center Challenges

The intersection of serverless computing and data centers has become a focal point in addressing persistent challenges. As organizations navigate the complexities of modern computing, understanding how serverless computing directly tackles data center challenges is crucial for staying ahead in the competitive digital arena.

- Resource Optimization

Traditional data centers often grapple with resource allocation, leading to inefficiencies and increased operational costs. Serverless cloud computing changes this by abstracting infrastructure management. Resources are allocated dynamically, per function invocation, based on actual usage, which improves performance per unit of capacity and minimizes waste.

- Scalability on Demand

One of the key challenges in traditional data centers is scalability. Future workloads are hard to predict, so capacity planning tends to end in either overprovisioning (idle, paid-for resources) or underprovisioning (too little capacity during spikes). Serverless computing resolves this by scaling automatically with demand. Whether there’s a sudden spike in traffic or a lull, the platform adjusts resources to match. This eliminates manual intervention and provides a cost-effective solution.

- Cost Efficiency

Cost management is a perennial concern for data centers, with fixed infrastructure costs often straining budgets. Serverless computing introduces a pay-as-you-go model in which organizations pay only for the computing resources they consume. This approach ties spending to actual usage and rewards optimizing code for efficiency, since faster, leaner functions cost less to run. The result is lower operational expenses.

- Fault Tolerance and Reliability

Downtime poses a significant challenge for data centers, impacting business continuity. Serverless applications are composed of independent functions that the provider runs across many instances, often spread over multiple availability zones. A failure in one function is isolated from the others. The platform can also replace unhealthy instances and, for some invocation types, retry failed events automatically. This distributed approach can substantially reduce the risk of system-wide failures and improve reliability compared to traditional monolithic architectures.

- Streamlined Deployment: From Code to Execution

Deploying applications in traditional data centers can be complex, often involving intricate configuration and coordination. Serverless computing simplifies deployment: developers upload their code, and the platform handles provisioning, runtime setup, and scaling. This streamlined process saves time and reduces the potential for human error, contributing to a more reliable and efficient operational environment.

- Auto-Scaling Without Manual Effort

Serverless computing introduces auto-scaling capabilities, so applications can handle varying workloads without operator involvement. Whether it’s a sudden surge in user activity or a period of low demand, serverless platforms automatically scale resources up or down, often all the way to zero when a function is idle. This helps maintain performance without manual intervention, within the provider’s concurrency limits. Such dynamic adaptability is a significant asset in overcoming the rigidity of traditional data center architectures.
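The auto-scaling behavior described above can be approximated with a simple capacity estimate. This is a simplified model, not how any specific provider's scaler works. It applies Little's law (requests in flight = arrival rate × average duration), then divides by how many requests one instance can serve at once. The `per_instance_concurrency` and `max_instances` parameters are hypothetical knobs. Real platforms also account for factors such as cold starts and burst limits.

```python
import math


def instances_needed(
    requests_per_second: float,
    avg_duration_s: float,
    per_instance_concurrency: int = 1,
    max_instances: int = 1000,
) -> int:
    """Estimate how many function instances are needed for a given load.

    Little's law: in-flight requests = arrival rate x average duration.
    Each instance serves `per_instance_concurrency` requests at once,
    and the result is capped at a hypothetical account-level limit.
    """
    in_flight = requests_per_second * avg_duration_s
    needed = math.ceil(in_flight / per_instance_concurrency)
    return min(needed, max_instances)


if __name__ == "__main__":
    # Hypothetical traffic: idle, normal load, a sudden spike, then a lull.
    for rps in [0, 50, 2000, 5]:
        n = instances_needed(rps, avg_duration_s=0.2)
        print(f"{rps} req/s -> {n} instances")
```

Zero traffic yields zero instances, which is the "scale to zero" behavior that makes idle time free under pay-as-you-go. A spike raises the count until it hits the cap. In a traditional data center, that headroom would have to be bought and racked in advance.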

Wrapping Up

As we wrap up this exploration, it becomes clear that serverless cloud computing is more than a technological upgrade. It signals a paradigm shift in how we conceptualize, operate, and expand digital infrastructure. Within data centers, serverless cloud computing paves the way for a resilient, agile, and innovation-centric digital future. The journey continues, and with serverless cloud computing leading the way, there is ample room for further advances.

FAQs

Does serverless computing use servers?

Yes. Despite the name, serverless computing does run on servers. The key distinction is that server management is abstracted away: developers focus solely on code, without manually provisioning or maintaining servers. In a serverless architecture, the cloud provider handles server allocation dynamically and scales automatically based on application needs.

Why is serverless computing so popular?

Serverless computing’s popularity stems from its streamlined approach, which lets developers focus on code without managing servers. The pay-as-you-go pricing model improves cost efficiency, and automatic scaling handles varying workloads without manual intervention. This combination of simplicity, cost-effectiveness, and scalability makes it an attractive option for modern application development and has driven its widespread adoption.

Is serverless the next big thing?

Many signs point that way. Serverless computing is poised to reshape the technology and data center landscape by offering strong efficiency and scalability. With its event-driven model and pay-as-you-go pricing, it has become a pivotal advancement for developers and businesses and a strong candidate for the next major shift in cloud computing.