The use of smart gadgets, such as wearables and smartphones, to manage home appliances remotely or to track physical activity is an indication of how the Internet of Things (IoT) is slowly changing lifestyles. According to experts, the present level of IoT adoption is only a precursor of what is to come.

Rapidly evolving technologies are going to influence multiple aspects of daily life, including health, work, and the management of appliances, to name a few. In terms of revenue, Gartner predicts that the Internet of Things has the potential to command $1.9 trillion in the next five or six years.

We need to understand the implications of this from the perspective of data centers.

Need to handle huge data volumes

One estimate predicts that by 2020, IoT will add 50 billion devices connected globally via the Internet. This mammoth collection of smart devices will generate enormous volumes of data, and that data will have to be handled by data centers at some stage.

Apart from data centers, IoT data will also affect providers of IoT solutions, suppliers, partners, and organizations that embrace wearable or IoT technologies.

IoT adoption will be strongest at first in the manufacturing, consumer, and government verticals, and will subsequently spread across many more. This extensive adoption will force enterprises to find ways to process large data volumes.

Significance of ensuring availability and security of IoT

The proliferation of connected IoT devices will heighten concerns about their security and availability. Some devices will not require an extreme level of security, such as a water dispenser that sends a message when it is due for refilling. Hackers cannot inflict significant damage by hacking the water dispenser. However, we must also consider the possibility of hackers accessing and damaging devices that manage mission-critical applications.

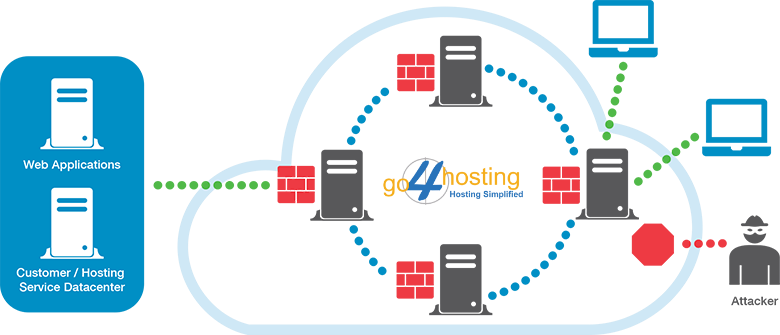

This makes it likely that sensitive data will be managed within the closed and secure walls of data centers, while the public cloud is adopted for innocuous devices and less significant data.
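One way to picture this split is as a simple placement rule. The Python sketch below is illustrative only: the `Device` fields, the `Placement` names, and the `choose_placement` function are hypothetical and are not taken from any product or standard. It assumes each device can be labeled in advance as mission critical or as handling sensitive data.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    """Where a device's data is hosted (hypothetical categories)."""
    PRIVATE_DATA_CENTER = "private data center"
    PUBLIC_CLOUD = "public cloud"


@dataclass(frozen=True)
class Device:
    """Minimal device description; both flags are assumed labels."""
    name: str
    mission_critical: bool
    handles_sensitive_data: bool


def choose_placement(device: Device) -> Placement:
    """Keep critical or sensitive workloads private; send the rest to public cloud."""
    if device.mission_critical or device.handles_sensitive_data:
        return Placement.PRIVATE_DATA_CENTER
    return Placement.PUBLIC_CLOUD


if __name__ == "__main__":
    devices = [
        Device("water dispenser", mission_critical=False, handles_sensitive_data=False),
        Device("chemical process controller", mission_critical=True, handles_sensitive_data=False),
    ]
    for device in devices:
        print(f"{device.name}: {choose_placement(device).value}")
```

A real policy would likely weigh more factors, such as regulation, cost, and latency, but the basic idea is the same: the more damage a compromised device could cause, the more tightly its data is held.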

The second important consideration is the availability of connected devices. Any downtime can cause serious problems, depending on the application area. If an IoT device that handles a continuous process in a chemical manufacturing plant goes down, the batch under process can be lost at great cost.

Significance of data generated by IoT

The major value of IoT lies in the massive volumes of data generated by connected devices, which underlines the importance of Big Data analytics and of computers with substantial compute power. Such systems will have to be backed by connectivity, power, cooling, and space in order to deliver results.

Another important attribute of IoT data is its dynamic nature. Data will be generated constantly and will have to be shared with an assortment of partners or processed for use by multiple services. It can also be combined with other forms of data to deliver greater insight. In any case, different connectivity options will need to be designed to give suppliers and partners secure, direct access.

One should also address latency, which in certain applications can degrade the user experience through extended lag times. This explains the need to keep data and infrastructure close to end users as well as devices. You will also have to ensure seamless connectivity with the digital supply chain and trading partners.

As mentioned earlier, IoT applications will need to be managed using a combination of private and public cloud, depending on the vulnerability and importance of the devices. This is definitely going to put a strain on data center and cloud capabilities. The strain will be most evident in regions around metropolitan areas, major events, and manufacturing and industrial hubs.

Importance of data center facilities for IoT implementation

Although it is still too early to predict exactly how the Internet of Things landscape will take shape, one thing is certain: data centers will have to play a significant part in a scenario dominated by IoT.

Due to the dynamic nature of IoT, data processing facilities will have to take into account both existing needs and future requirements for connectivity, scalability, security, and flexibility. This underlines the need for CIOs to plan strategies that properly balance current and future needs when designing data center infrastructure. The most visible impact of IoT will be seen in data center facilities in India, which need to be prepared to play their significant role.