Cloud Load Balancing – What You Need to Know

When a large number of people visit a website at once, the web application may struggle to handle all of their requests, and the load can even cause system failures. For a website owner, a site that is down or inaccessible means lost prospective clients. Cloud load balancing can be a game-changer in such a situation.

Although the shift of applications from legacy data centers to the cloud continues to gain traction, server load balancing remains an integral component of IT infrastructure. Whether servers are temporary or permanent, virtual or physical, workloads always need to be distributed intelligently across all of them.

What is Cloud Load Balancing?

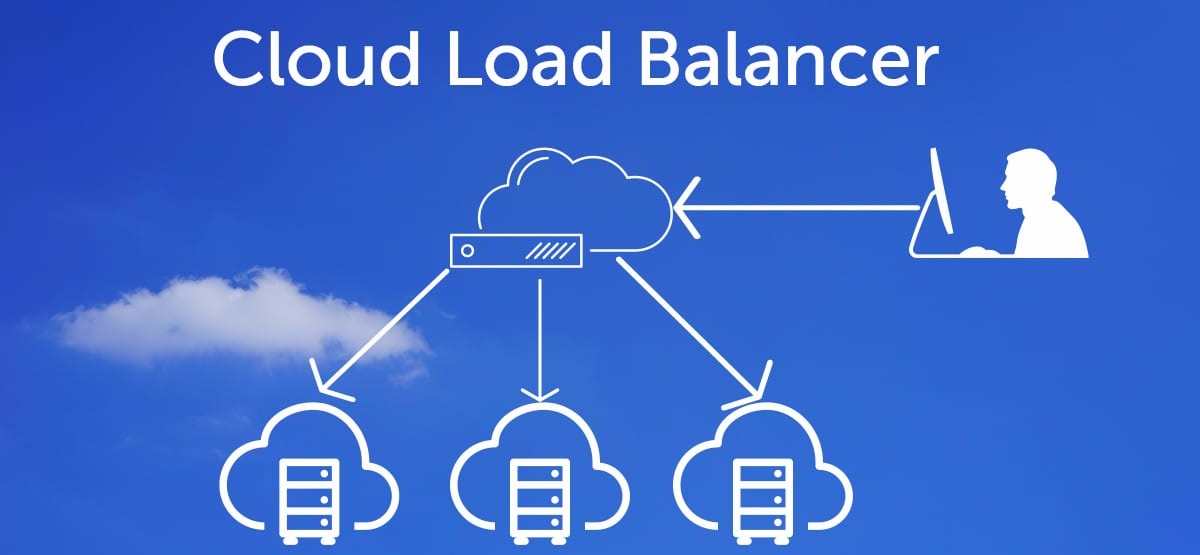

Cloud load balancing is a software-based service that distributes traffic across multiple cloud servers. Like physical load balancers, cloud load balancers are designed to manage large workloads so that no single server is overburdened with requests, which would cause delay and disruption.
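As a rough illustration of keeping any single server from being overburdened, here is a minimal sketch of a least-connections policy, one common way to spread requests. The class name and the server names are hypothetical, and a real cloud load balancer would also handle health checks, timeouts, and concurrency.

```python
class LeastConnectionsBalancer:
    """Send each request to the server with the fewest active connections."""

    def __init__(self, servers):
        # Active connection count per server (server names are hypothetical).
        self.active = {name: 0 for name in servers}

    def acquire(self):
        # On a tie, min() returns the first server in insertion order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a request finishes so the server can take new work.
        self.active[server] -= 1


balancer = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
assigned = [balancer.acquire() for _ in range(6)]
print(assigned)
```

With six requests and none released, each of the three servers ends up holding two connections.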

However, distributing workloads across a mix of hybrid infrastructures, data centers, and clouds is difficult. Done badly, it results in poorly distributed workloads and degraded application performance. This underlines the growing need for Global Server Load Balancing (GSLB).

Load Balancing From The Cloud’s Perspective

Load balancers are also known as Application Delivery Controllers (ADCs). They are designed to spread workloads so that collective server capacity is used optimally and applications continue to perform efficiently.

Organizations have long used hardware-based Application Delivery Controllers to distribute workloads efficiently across backend servers. Traditionally, the load balancing market has been dominated by Radware, Kemp Technologies, F5, and Citrix, whose products are considered well suited to traditional data center environments.

The more recent Application Delivery Controllers, including those from the same vendors, are software-based, whereas their legacy counterparts were hardware-intensive. Software load balancers such as Amazon ELB, Nginx, and HAProxy likewise help organizations move more applications to a cloud environment. This transition aligns with the evolution towards efficient cloud load balancer systems.

There are two fundamental approaches to multi-cloud Global Server Load Balancing. The first covers basic traffic management through legacy managed DNS providers, which is simple to use and cost-efficient.

Need For a Better Solution

However, this approach offers only limited traffic management, with a few capabilities such as geo routing and round-robin DNS. It also cannot prevent workloads from being distributed poorly, because it routes traffic by fixed, static rules rather than by real-time load and each data center’s capacity.
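To make the "fixed and static rules" point concrete, here is a minimal sketch of round-robin DNS. The function name is hypothetical and the addresses come from the reserved documentation ranges; the point is that the rotation never looks at how busy each data center is.

```python
from itertools import cycle


def round_robin_dns(addresses):
    """Return a resolver that hands out addresses in a fixed rotation."""
    rotation = cycle(addresses)

    def resolve():
        # No load or capacity data is consulted: the rule is purely static.
        return next(rotation)

    return resolve


# Example addresses from the reserved documentation ranges.
resolve = round_robin_dns(["192.0.2.10", "198.51.100.20"])
answers = [resolve() for _ in range(4)]
print(answers)
```

Each address receives every second lookup, even if the data center behind one of them is already saturated.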

Consider geo routing, for example: it sends each user request to the nearest data center, but it doesn’t account for spikes, outages, or an uneven geographical distribution of users. This highlights the significance of cloud load balancing for managing varying workloads, absorbing surges, and ensuring efficient user access despite geographical disparities.
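The geo routing rule can be sketched in a few lines. This is an illustrative example only: the data center names and approximate coordinates are assumptions, and distance is estimated with the haversine formula.

```python
from math import asin, cos, radians, sin, sqrt

# Hypothetical data centers with approximate (latitude, longitude).
DATA_CENTERS = {
    "mumbai": (19.08, 72.88),
    "frankfurt": (50.11, 8.68),
    "virginia": (38.95, -77.45),
}


def haversine_km(a, b):
    """Great-circle distance in kilometers between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371 * asin(sqrt(h))


def geo_route(user_location):
    """Pick the nearest data center; load and outages are ignored."""
    return min(DATA_CENTERS, key=lambda dc: haversine_km(user_location, DATA_CENTERS[dc]))


delhi_user = (28.61, 77.21)
paris_user = (48.86, 2.35)
print(geo_route(delhi_user), geo_route(paris_user))
```

Every user near Delhi is sent to the Mumbai data center regardless of how loaded it is or whether it is even up, which is exactly the limitation described above.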

The second, more innovative approach uses DNS appliances that are purpose-built to integrate with Application Delivery Controllers, improving on the shortcomings of the first approach.

Many businesses will find drawbacks in this strategy too, notably the need for capital-intensive network gear. High-performance network appliances are cost-prohibitive, so deploying them for global load balancing at large scale is challenging, especially when mega-scale requirements must be met and a single data center with DNS capabilities falls short.

Hosting DNS at a data center also adds a point of failure, since DNS is exceptionally vulnerable to DDoS attacks, which are not easy to handle. Moreover, DNS needs to be available 100 percent of the time, which is beyond most enterprises’ capacity.

Therefore, many organizations deploy their own data center load balancers without relying on the Global Server Load Balancing features offered by load-balancer providers. Instead, they pair those load balancers with managed, cloud-based GSLB functionality, which manages traffic intelligently by using the real-time telemetry the load balancers provide.

Delivering GSLB Via Cloud

The most efficient delivery model for Global Server Load Balancing is a cloud-based managed service. Many applications worldwide already run on multi-cloud or hybrid cloud infrastructure.

An ideal GSLB service must be capable of redirecting workloads away from points of presence (POPs) that are already over-burdened with requests. To avert overload, it must efficiently detect the conditions that cause it, such as a loss of capacity or a spike in demand. A hybrid cloud load balancer improves network performance while avoiding the high costs of traditional load balancing hardware.
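Redirecting workloads away from over-burdened POPs might look like the sketch below. It is a simplified illustration, not a description of any particular GSLB product: the `Pop` fields, the `pick_pop` function, and the 0.8 utilization threshold are all assumptions, and the telemetry values are made up.

```python
from dataclasses import dataclass


@dataclass
class Pop:
    name: str
    latency_ms: float   # latency measured from the user's region
    utilization: float  # fraction of capacity in use, 0.0 to 1.0
    healthy: bool = True


def pick_pop(pops, max_utilization=0.8):
    """Prefer the lowest-latency healthy POP that still has headroom."""
    healthy = [p for p in pops if p.healthy]
    if not healthy:
        raise RuntimeError("no healthy POP available")
    has_headroom = [p for p in healthy if p.utilization < max_utilization]
    if has_headroom:
        return min(has_headroom, key=lambda p: p.latency_ms)
    # Every healthy POP is over the threshold: fall back to the least loaded.
    return min(healthy, key=lambda p: p.utilization)


pops = [
    Pop("mumbai", latency_ms=20, utilization=0.95),
    Pop("singapore", latency_ms=60, utilization=0.40),
    Pop("frankfurt", latency_ms=110, utilization=0.30, healthy=False),
]
choice = pick_pop(pops)
print(choice.name)
```

Here the closest POP is skipped because it is at 95 percent utilization, and the unhealthy POP is never considered, so the request goes to the next-closest POP with spare capacity.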

Because hybrid architectures mix disparate Application Delivery Controllers, both open source and commercial, GSLB services need to offer an accessible interface that facilitates real-time data collection from all of them.

In addition to being globally available, an exemplary cloud load balancing (GSLB) service must manage global traffic efficiently. Delivered as a cloud service, GSLB avoids the CAPEX of dedicated appliances and much of the OPEX of running them. Supporting this requires redundant infrastructure, so that the cloud-based GSLB delivers its real-time capabilities reliably.

Conclusion

With reliable GSLB capabilities, organizations can keep their proprietary Application Delivery Controller solutions while efficiently managing traffic on a global scale. Furthermore, by combining the two approaches described in this article, it is possible to offer a gratifying and consistent user experience. If you wish to know more about global load balancing or how it can benefit you, please feel free to contact Go4hosting.